A/B testing is sort of like an American Idol audition. Everyone thinks they’re great at it. Few actually are.

I’m sorry to tell you, but you’re probably A/B testing wrong.

And you’re not alone. It’s shocking to see how many mistakes even well-known and respected marketers make when it comes to testing.

And that’s a big problem when the results of those tests are driving your marketing. Fortunately, each of the mistakes below is easily correctable once you recognize what you’re doing wrong.

Mistake #1: You end your tests too soon

Technology has made testing easier in a lot of ways. Today’s solutions can automate most of the process.

Tools like Optimizely and Visual Website Optimizer can save you a ton of time and effort. But like any tools, they are only as good as the person using them.

It’s easy to look at the early results of a test and draw a conclusion. Take this example from Peep Laja of Conversion XL.

What would you do if this were your test? Call the control a winner and start implementing the learnings throughout your site?

Image source: Conversion XL

That’s what most would do. After all, it says right there that the test version has a 0% chance of beating the control.

A couple of weeks later, the results looked quite different.

Image source: Conversion XL

That’s right. The version that had no chance of winning was now significantly outperforming the control.

What’s going on here? How can that be?

Scroll back up and look at the sample size again. A little over 100 visitors isn’t enough to get valid results.

So what size do you need?

That depends on a few factors, such as your conversion rate, minimum detectable effect and acceptable margin of error. But the answer is probably higher than you think.

Plugging your numbers into a sample size calculator, such as the ones from Optimizely or Evan Miller, is the easiest way to determine the minimum sample size you’ll need.
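If you’d rather see roughly what those calculators do, here is a minimal Python sketch. It assumes the standard two-proportion sample size formula with a two-sided test; the function name, the 5% significance level and the 80% power default are my own illustrative choices, not settings taken from either calculator, so expect the numbers to differ somewhat from tool to tool.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, relative_mde, alpha=0.05, power=0.80):
    """Approximate visitors needed per variant (hypothetical helper).

    baseline_rate: current conversion rate, e.g. 0.03 for 3%
    relative_mde:  minimum detectable effect as a relative lift, e.g. 0.20 for +20%
    alpha:         accepted false-positive rate (two-sided test)
    power:         chance of detecting the lift if it really exists
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_mde)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (p1 + p2) / 2
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


# Example: a 3% baseline conversion rate and a 20% relative lift.
n = sample_size_per_variant(baseline_rate=0.03, relative_mde=0.20)
print(f"Visitors needed per variant: {n}")
```

With those example inputs the answer comes out well above 10,000 visitors per variant, which is a long way from the hundred or so in the test above.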

But this isn’t the only reason to keep your tests running.

Timing is also a variable that could skew results. Some businesses see conversion rates vary significantly between days of the week. There could also be events or other external factors that temporarily skew results one way or the other.

There are also random anomalies.

This simulation shows how even A/A tests (where both variants are identical) can deliver false positives up to 25% of the time.

If you let your tests run for at least a few weeks, any anomalies should even out.
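You can see the effect of checking results too early with a quick simulation of your own. The sketch below is not the simulation mentioned above. It is a simplified version under my own assumptions: both variants convert at the same hypothetical 5% rate, significance is judged with a plain two-proportion z-test, and the “impatient” tester checks the results every 100 visitors.

```python
import math
import random
from statistics import NormalDist


def is_significant(conv_a, n_a, conv_b, n_b, alpha=0.05):
    """Two-sided two-proportion z-test on conversion counts."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return False  # no conversions (or all conversions) so far
    z = (conv_a / n_a - conv_b / n_b) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return p_value < alpha


def simulate_aa(runs=300, visitors=2000, rate=0.05, peek_every=100, seed=1):
    """Run A/A tests and count how often a 'winner' appears by chance.

    Returns (share of runs flagged at ANY peek, share flagged at the end only).
    """
    rng = random.Random(seed)
    flagged_while_peeking = 0
    flagged_at_end = 0
    for _ in range(runs):
        conv_a = conv_b = 0
        flagged_early = False
        for n in range(1, visitors + 1):
            # Both variants are identical, so both use the same rate.
            conv_a += rng.random() < rate
            conv_b += rng.random() < rate
            if n % peek_every == 0 and is_significant(conv_a, n, conv_b, n):
                flagged_early = True
        flagged_while_peeking += flagged_early
        flagged_at_end += is_significant(conv_a, visitors, conv_b, visitors)
    return flagged_while_peeking / runs, flagged_at_end / runs


peeking, final = simulate_aa()
print(f"False positives when peeking: {peeking:.0%}")
print(f"False positives at the planned end: {final:.0%}")
```

Because the last peek happens at the planned end of the test, the peeking rate can never be lower than the end-only rate, and in practice it tends to be several times higher. Every one of those “winners” is noise.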

Mistake #2: You’re making assumptions

You’d think this would be the last thing you’d see on a list of testing mistakes. After all, the whole reason for testing is to avoid assumptions and act on facts.

But even the experts sometimes fall into this trap.

Take this example from the Unbounce blog.

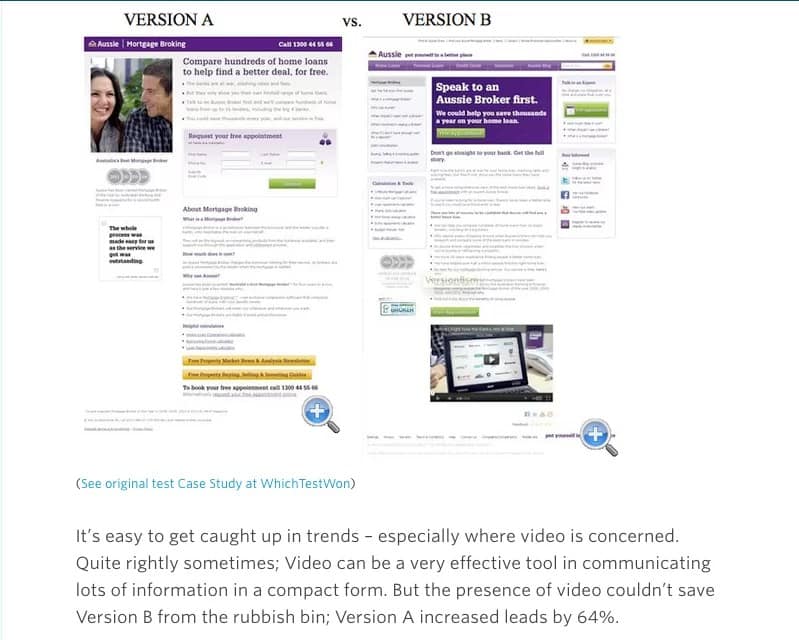

The article makes it sound like it’s testing the use of video. But that’s not the only difference between the versions.

My hypothesis would be that the headline in the winning version had a bigger impact. That’s the first thing that jumps out at me. (Although I’m a copywriter so words are kind of my thing.)

If you want to learn definitively how different components affect response, you need to isolate them.

Don’t get me wrong. There’s nothing wrong with testing drastically different creatives against each other. In many cases, it’s a better choice than testing a single component, because it offers a greater chance of a significant lift.

Just be aware of what the test actually measures: the sum of the differences, not each individual change.

Mistake #3: You’re testing the wrong things

We’ve all seen articles that highlight results like this:

Image source: Leadpages

It’s pretty exciting. So it’s understandable to want to rush out and test it on your own site.

Here’s why you shouldn’t.

First, trying to replicate a test you read about is a spitball approach. There’s no strategy behind it. You’re just making changes to see what sticks.

Smart testing starts with a valid hypothesis. And no, “this worked for another company so it might work for us” is not a valid hypothesis.

A hypothesis should start with some sort of insight or logic. For example, if customer interviews reveal a common pain point or purchase driver, you might hypothesize a headline that speaks to that would perform better than the control headline.

Even smaller tests should include an educated rationale, such as “using a color with more contrast for the CTA button should help draw more attention to what we want visitors to do.”

Second, and even more importantly, small changes usually only lead to small wins. To get big wins, you need to test the big things first. For most of us, changing the color of a button just isn’t going to make a huge difference. Wasting your time on that deprives you of other important learning opportunities.

Remember, accurate testing requires a large sample size. And there’s only so much traffic to go around.

A framework such as PIE or PXL can help you prioritize your hypotheses and ensure you’re getting the most out of your testing.
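As a rough illustration, here is how a PIE-style ranking might look in Python. I’m assuming the common formulation of PIE, where each idea gets a 1 to 10 score for Potential, Importance and Ease and the three are averaged. The ideas and scores are made up for the example, and the `Idea` class and `rank_ideas` function are hypothetical names of my own.

```python
from dataclasses import dataclass


@dataclass
class Idea:
    name: str
    potential: int   # how much improvement is possible (1-10)
    importance: int  # how valuable the traffic to this page is (1-10)
    ease: int        # how easy the test is to build and run (1-10)

    @property
    def pie_score(self) -> float:
        # Assumed scoring rule: simple average of the three ratings.
        return (self.potential + self.importance + self.ease) / 3


def rank_ideas(ideas):
    """Return ideas sorted from highest to lowest PIE score."""
    return sorted(ideas, key=lambda idea: idea.pie_score, reverse=True)


# Hypothetical backlog with made-up scores.
ideas = [
    Idea("Change CTA button color", potential=2, importance=5, ease=10),
    Idea("Rewrite headline around top customer pain point", 8, 9, 7),
    Idea("Replace free trial offer with demo", 9, 8, 4),
]

for idea in rank_ideas(ideas):
    print(f"{idea.pie_score:.1f}  {idea.name}")
```

The exact scores matter less than the discipline of scoring everything the same way before you spend traffic on it.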

Mistake #4: You’re missing the big picture

It’s easy to get tunnel vision when you’re looking at A/B test results. After all, you’re supposed to isolate variations so you know exactly how a change impacts your conversion rate.

The problem is not in the testing itself, though. It’s in how you interpret and implement those results.

Let’s use a hypothetical example.

Say you’re testing two different offers for your landing page: a free trial and a free demo.

Version A, the trial, gets a response of 3.2%. Version B, the demo, gets 5.6%.

Based on those results, Version B is the clear winner. Would you say that Version B is the better offer for your business? Would you roll it out across your site?

Maybe the demo is better. But maybe it’s not?

What if the leads from Version B mostly fail to purchase afterward, while a good portion of Version A’s become paying customers?

Or what if the leads that do become customers from the demo churn at a higher rate than those from the trial?

Would you still say Version B is the better offer?
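To put numbers on it, here is a small Python sketch. The 3.2% and 5.6% response rates come from the example above. Everything else is an assumption I’ve invented for illustration: 10,000 visitors per version, and 25% of trial leads versus 10% of demo leads going on to buy.

```python
def customers_won(visitors, lead_rate, close_rate):
    """Paying customers produced by a landing page (hypothetical helper)."""
    return visitors * lead_rate * close_rate


VISITORS = 10_000  # assumed traffic per version

# Lead rates are from the example; close rates are invented for illustration.
trial = customers_won(VISITORS, lead_rate=0.032, close_rate=0.25)
demo = customers_won(VISITORS, lead_rate=0.056, close_rate=0.10)

print(f"Version A (trial): {round(trial)} customers")
print(f"Version B (demo):  {round(demo)} customers")
```

Under those assumptions the trial produces about 80 customers and the demo about 56, even though the demo “won” the landing page test. Your own close rates will differ, which is exactly why you need to look at them.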

Here’s another example.

Let’s say you have an AdWords campaign running, and your headline is “Start Testing in Minutes.”

Now let’s say that ad leads to a landing page where you’re running an A/B test on the headline.

Version A is: “Start Testing in Minutes with This Point-and-Click A/B Testing Tool”

Version B is: “Increase Conversions with This Point-and-Click A/B Testing Tool”

Version A wins pretty handily. So you should use that approach on all your pages, right?

Not necessarily. Sure, A is the winner here. But you need to consider the factors beyond the page. Look at the headline of the ad that drove people to that page again.

Version A is the better match. It gives people the information they expect, moving them seamlessly through the journey.

Version B, on the other hand, is a change in direction. It breaks the flow, killing momentum and conversions.

But what if the AdWords headline matched Version B more closely? The results would almost assuredly be different.

Mistake #5: You treat testing as a one-step process

After declaring a winner, what do you do? I bet you roll out the winning version and move on to something else. But then you’re missing out on valuable insights.

One of the first things you should do is share the results with the rest of your team. I can’t tell you how many times in my own career some useful nugget has come to light after a project was finished or well under way.

You also want to apply what you learn elsewhere. Try to replicate the results with other segments. Use it to form new hypotheses. Build on those tests by further refining your most important pages. Optimization is an iterative process.

Finally, make it a point to revisit your tests periodically. Things change rapidly in today’s world and what’s working now might not be the best option in a year or two.

Conclusion

If you’re making any of the mistakes I’ve just described, don’t feel bad. Most marketers make at least one, even the ones from successful companies.

The good news is that now you know what you were doing wrong and you can correct course. Here are the first steps you can take today:

- Prioritize your testing ideas. Throw out any that aren’t based on a solid hypothesis. Then rank the remaining ideas based on their potential impact.

- Predetermine the sample size you’ll need to get statistically valid results. Here are the links to the calculators again: https://www.optimizely.com/resources/sample-size-calculator and www.evanmiller.org/ab-testing/sample-size.html.

- Figure out how you’re going to set up your test. Be sure to map out factors outside of the component you’re testing and isolate only the variables that prove or disprove your hypothesis.

Let me know how it goes in the comments section below.

Feature image by Alex Proimos
